The Decentralized Web Movement

Over the years, computers grew in number, and a logical step in their evolution was to connect them together to allow their users to share things. Little networks grew into huge networks and some computers gained more power than the rest: they called themselves “servers”. Today millions of people are connected online at the mercy of middlemen who control the servers of the world.

This is not the introduction to a dystopian fantasy world but an excerpt from a promotional video for Opera Unite, a framework that allows users to host information on their home computer. It was a bold attempt to change the centralized architecture of the Internet. A number of smart people had been pondering this idea even before Opera’s experiment failed miserably.

The concept of a decentralized web is gaining traction: more and more people realize that something has to change. The reason for this trend is obvious: the number of data security and privacy disasters made public has spiked recently. In April 2011, for example, an update to the security terms of service of the widely used Dropbox tool revealed that, contrary to previous claims, Dropbox Inc. has full access to user data.

An analysis of the changes to the Facebook privacy policy over time paints a gloomy picture of how the world’s largest social network changed “from a private communication space to a platform that shares user information with advertising and business partners while limiting the users’ options to control their own information”.

With more and more of our personal data moving to centralized servers, or “cloud services” (a term that should be understood as a euphemism), we are no longer in control. But there is hope: dozens of projects out there are trying to stop the trend of centralization and data consolidation.

Decentralized Applications

The most popular of the lot is probably Diaspora. The project got a lot of attention in April 2010 when they managed to raise about $200,000 from almost 6,500 supporters. The software looks and feels very much like Facebook or Google+. The innovation is that users are allowed and even encouraged to set up their own Diaspora node. This essentially means allowing users to set up their own Facebook server at home (or wherever they want). The Diaspora nodes are able to interact with each other to form one distributed social network. Furthermore, instead of having to log in to one central server, users may choose one of many servers administered by different entities. In the end, they can decide whom to trust with their data, and there is no single entity that has access to all the data.

Another social network project worth mentioning follows the same principle. Its name is Buddycloud. The main difference between Buddycloud and Diaspora lies in their implementation details. Buddycloud builds upon XMPP (Extensible Messaging and Presence Protocol), a more than 10-year-old, widely implemented specification for “near-real-time, extensible instant messaging, presence information, and contact list maintenance”. There are many unknowns in this area, so building on such a proven protocol instead of defining new standards might prove to be an advantage. But there are many more social networking projects out there; Wikipedia has a nice list.

The Unhosted project implements another concept. Instead of providing a specific decentralized service it aims to be a meta-service. And after talking to Michiel de Jong I have the impression his plan is even more crucial. He aims to create something fundamental, a protocol, an architecture, a new way of writing web applications. The idea is the following: the traditional architecture of a hosted website provides both processing and storage. An unhosted website only hosts the application, not the data. Unhosted wants to separate the application from the data. By storing the data in another location and combining both application and data only in the browser, the application provider can never access the data. An ingenious and very ambitious idea. I hope they succeed!

Decentralized Storage

A project that aims to replace Dropbox is ownCloud, an open personal cloud which runs on your personal server. It enables accessing your data from all of your devices. Sharing with other people is also possible. It supports automatic backups, versioning and encryption.

The Locker Project has similar goals. It allows self-hosting (installing its software on your own server) and offers a hosted service similar to what Dropbox provides. The service pulls in and archives all kinds of data that the user has permission to access and stores it in the user’s personal Locker: tweets, photos, videos, click-streams, check-ins, data from real-world sensors like heart monitors, health records and financial records like transaction histories (source).

A third project worth mentioning is SparkleShare. It is similar to the other projects in this category but allows pluggable backends. That means you can choose, for example, GitHub as the backend for your data or, of course, your personal server. Awesome!

Freedom to the Networks

Projects such as netless carry the idea even further because after the data is liberated, the connection itself is a soft spot. Network connections should be liberated from corporate and government control by circumventing the big centralized data hubs and instead installing a decentralized wireless mesh network where everyone can participate and communicate.

The adventurous netless project plans to use the city transportation grid as its data backbone. Nodes of the network are attached to city vehicles – trams, buses, taxis and possibly – pedestrians. Information exchange between the nodes happens only when the carriers pass by each other in the city traffic. Digital data switches its routes just the same way you’d switch from tram number 2 to bus number 5. Very inspiring.

Another idea is to use networks of mobile phones to create a mesh network. The Serval project is working on this, and it already has a prototype for the Android platform.

The German Freifunk community pursues a similar goal. It is a non-commercial open initiative to support free radio networks in the German region. It is part of the international movement for free and wireless radio networks (source).

A purely software-based project is Tor. It is free software and an open network that helps its users defend against a form of network surveillance that threatens personal freedom and privacy as well as confidential business activities and relationships.

Peer to Peer Currency

One integral thing this article has not talked about yet is money. Bitcoin, a peer-to-peer currency, might be the missing piece of the puzzle. The Bitcoin system has no central authority that issues new money or tracks transactions; it is managed collectively by the network.

A major problem of digital currency has been preventing double-spending. Digital money can be copied, so a mechanism is necessary to prevent anyone from spending the same money twice. Bitcoin does without actual digital coins. Instead, the system is essentially one large transaction log that records which amounts were transferred to whom.
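The following toy C++ sketch makes the "log instead of coins" idea concrete. It is a hypothetical illustration and not how Bitcoin actually stores data. Bitcoin tracks unspent transaction outputs and verifies signatures, and both are left out here. In the sketch, balances are derived only by replaying an append-only list of transfers. A new transfer is rejected if the sender's balance, computed from the log, does not cover it. The names `Transfer` and `Ledger` are invented for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Toy ledger (hypothetical): there are no coin objects, only an
// append-only log of transfers. Balances are derived from the log.
struct Transfer {
    std::string from;     // empty string means newly created money (a reward)
    std::string to;
    std::int64_t amount;
};

class Ledger {
public:
    // Balance of 'account', computed by replaying the whole log.
    std::int64_t balance(const std::string& account) const {
        std::int64_t sum = 0;
        for (const Transfer& t : log_) {
            if (t.to == account) sum += t.amount;
            if (t.from == account) sum -= t.amount;
        }
        return sum;
    }

    // Appends a transfer only if the sender can cover it according to the log.
    // A second spend of the same money fails because the first spend is
    // already recorded and has reduced the sender's balance.
    bool append(const Transfer& t) {
        if (t.amount <= 0) return false;
        if (!t.from.empty() && balance(t.from) < t.amount) return false;
        log_.push_back(t);
        return true;
    }

    std::size_t size() const { return log_.size(); }

private:
    std::vector<Transfer> log_;
};
```

Consider this usage: after `append({"", "alice", 50})` and `append({"alice", "bob", 30})`, an attempt to send another 30 from alice to carol is refused. The log shows that only 20 remain.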

Each participant has a key pair: the private key signs transactions and the public key lets others verify them. Transactions are entered into a global, ever-growing log, and new entries are signed at regular intervals. Signing the log is designed to require extensive computation, and participants across the whole network compete to produce each signature.

This protects the entire system from false signatures and from anyone tampering with the log to modify past transactions. To rewrite the log, an attacker would need more computational power than the rest of the Bitcoin network combined.
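The expensive “signing” described above is known as proof-of-work. The toy sketch below illustrates it under two stated assumptions. First, it uses a 64-bit FNV-1a hash where Bitcoin uses double SHA-256, so it offers no security at all. Second, it uses a tiny difficulty so that it runs instantly. The sketch shows the asymmetry that the security argument relies on:

- Finding a valid nonce takes many hash attempts.
- Checking a nonce takes a single hash.
- Changing the logged data almost certainly invalidates the nonce.

The function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// 64-bit FNV-1a hash: a simple stand-in for a cryptographic hash (NOT secure).
std::uint64_t fnv1a(const std::string& data) {
    std::uint64_t h = 14695981039346656037ULL;   // FNV offset basis
    for (unsigned char c : data) {
        h ^= c;
        h *= 1099511628211ULL;                   // FNV prime
    }
    return h;
}

// Cheap check: the nonce is valid if hash(data:nonce) starts with
// 'zero_bits' zero bits (0 <= zero_bits <= 64).
bool valid_proof(const std::string& data, std::uint64_t nonce, int zero_bits) {
    std::uint64_t h = fnv1a(data + ":" + std::to_string(nonce));
    return zero_bits == 0 || (h >> (64 - zero_bits)) == 0;
}

// Expensive search: try nonces until one passes the check.
// Each extra zero bit doubles the expected number of attempts.
std::uint64_t find_proof(const std::string& data, int zero_bits) {
    std::uint64_t nonce = 0;
    while (!valid_proof(data, nonce, zero_bits)) ++nonce;
    return nonce;
}
```

With 16 zero bits, `find_proof` needs about 65,536 attempts on average. Anyone can then confirm the result with a single call to `valid_proof`.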

Users who give their computing time to the network are rewarded with Bitcoins for their trouble. This is also how the money is created in the first place. In addition, participants who transfer money are free to include a transaction fee in their order. This extra money goes to the user who signs the part of the log containing the transaction.

A considerable number of sites that accept Bitcoins in exchange for services or goods have emerged. For example, you can buy socks online or even pay for your lunch at a burger restaurant in Berlin.

Conclusion

In closing, I find it encouraging that so many people feel that things have to change and are developing ideas and projects to make it happen. We will see many exciting things in the future, and despite the overwhelming might of well-established products, I am hopeful.

Chaos Communication Camp

I just returned from a world of distorted day-night-rhythm, caffeine, camping, data toilets, colorful lights and, most importantly, ingenious people and projects. There were some great talks, and many great hackerspaces from Germany and around the world attended.

Every attendee at the conference received a r0cket badge when arriving at the campground. It’s a “full featured microcontroller development board” including a 32-bit ARM Cortex-M3 LPC1343 microcontroller, a 96×68 monochrome LCD and a 2.4 GHz transceiver for mesh networking.

There was a DECT and GSM phone network set up at the camp.

HAM-radio enthusiasts from Metalab were working on Moonbounce: radio communication over a distance of at least 2 light-seconds using the Moon as a reflector.

A couple of microcopter and drone projects presented their work. One example is the NG-UAVP project, which released a couple of great aerial videos. A project I did not know but find particularly fascinating is the Paparazzi project. It is an “exceptionally powerful and versatile autopilot system for fixedwing aircrafts as well as multicopters”. Scientists from the Finnish Meteorological Institute, for example, use it to measure temperature, humidity, pressure, wind direction and wind speed at altitudes of up to 1000 m in Antarctica. Very impressive!

For me personally, meeting a number of interesting people from the decentralized web movement was really great. I had some truly enlightening conversations with people from the FreedomBox Foundation, Michiel de Jong from unhosted.org and the buddycloud developers. They got me thinking about the whole topic again, especially about host-proof applications and what a host-proof social network could look like.

Too bad this event does not take place every year. I had such a great time that I would love to go again soon!

Nvidia Driver Version on Linux

How do you get the version of the Nvidia kernel module on Linux? I’m posting this here because I’m sure I will forget it again. It is very relevant when checking that the proper driver is installed for CUDA use.

cat /proc/driver/nvidia/version

On my system this returns:

NVRM version: NVIDIA UNIX x86_64 Kernel Module 270.41.19 GCC version: gcc version 4.4.3 (Ubuntu 4.4.3-4ubuntu5)
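A program may need to check the driver version, for example before launching CUDA code. In that case the number can be pulled out of that line. The following minimal C++ sketch assumes that the line contains the text `Kernel Module` followed by the version, as in the output above. Other driver releases may format the file differently. The function names are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <string>

// Extracts the version number that follows "Kernel Module" in a line such
// as the one above. Returns an empty string if the marker is not found.
// Assumes the format shown above; other driver releases may differ.
std::string parse_nvidia_version(const std::string& line) {
    const std::string marker = "Kernel Module";
    std::string::size_type pos = line.find(marker);
    if (pos == std::string::npos) return "";
    pos = line.find_first_not_of(' ', pos + marker.size());   // skip spaces
    if (pos == std::string::npos) return "";
    std::string::size_type end = line.find(' ', pos);          // version ends at next space
    return end == std::string::npos ? line.substr(pos) : line.substr(pos, end - pos);
}

// Reads the first line of the proc file; returns "" if it cannot be read
// (for example, when the nvidia module is not loaded).
std::string nvidia_driver_version() {
    std::ifstream in("/proc/driver/nvidia/version");
    std::string line;
    if (!std::getline(in, line)) return "";
    return parse_nvidia_version(line);
}
```

For the output shown above, `parse_nvidia_version` yields `270.41.19`.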

Talks Page

I added a Talks page to this blog. I plan to list and publish interesting talks I have given on various occasions. Currently the page lists two talks. The first is a GPGPU seminar I gave a year ago at my old university. The second is from BoostCon, where I recently had the honor of speaking about the project I worked on last year: a library for the Cell processor.

Stallman was right

“Stallman was right” is one of the important messages Eben Moglen conveys in his recent talks about Freedom and the Cloud. He’s a convincing speaker, his arguments are striking and the cause might very well be one of the most important challenges the Internet faces right now.

He speaks about privacy: the freedom to choose with whom to share what information. He speaks about freedom of speech: the liberty to speak and communicate freely without censorship. He speaks about how we give up those freedoms, often without noticing or, worse, without caring. By signing up for Facebook. By using Gmail.

It’s insane if you think about it. We communicate with our friends and send them private messages through a database in Northern California. We store our personal information, including images, in that same database. And while we put more and more information, more and more of our lives, into that database, its owners are busy thinking about ways to make money with our data. By now I’m sure they have come to at least one conclusion: to make money, they will share our data with entities we never intended to share it with.

It gets worse, and Eben Moglen elaborates on this very well in his keynote speech at FOSDEM 2011. All our data is routed through, and even stored in, a company’s database. We can choose to trust that particular company not to do anything bad with our data. But we can never be sure that the company will act in our interest if it is offered incentives (“We won’t shut you down if you comply”) to share certain data with certain government agencies. Since our data is stored centrally, agencies like the NSA would have a field day.

So indeed, Stallman was right when he called cloud computing a trap. He advises us “to stay local and stick with our own computers”.

Eben Moglen sees the urgency of these problems, so he is taking action. He informs the public with persuasive talks, and he gathers smart, ingenious and influential people under the newly founded FreedomBox Foundation to find solutions. In accordance with Stallman’s suggestion, the ingredients of the solution have been identified:

- cheap, compact plug computers that can be used as personal servers

- a free (as in freedom) software stack that is designed to respect and preserve the user’s privacy

- mesh networking technology to keep the network alive if centralized internet connections fail

The idea is simple: replace all cloud services (social network, e-mail, photo storage, etc.) with distributed systems. The FreedomBox project aims to provide everyone with a compact, cheap, low-power plug computer. Such a device will come preloaded with free software that “knows how to securely contact your friends and business associates, is able to store and securely backup your personal data and generally maintaining your presence in the various networks you have come to rely on for communicating with people in society”.

There are a number of other projects out there that are worth mentioning here. A very interesting assessment of the current state of the social web and existing standards is the W3C Social Web Incubator Group report. The Diaspora project develops distributed and secure social networking software and is worth keeping an eye on. The FreedomBox community maintains a comprehensive list of existing projects.

I expect the foundation to develop a software package that provides stable system software and brings together a number of existing projects that allow users to move from cloud services to their own server. I will help where I can, and so should you!

C++ Workspace Class

Oftentimes algorithms require buffers for communication or need to store intermediate results. Or they need to pre-compute and store auxiliary data. Such algorithms are often called in loops with similar or identical parameters but different data. Creating the resources an algorithm needs in every invocation is inefficient and can be a performance bottleneck. I would like to give users a means of reusing objects across multiple calls to algorithms.

The basic idea is to have a generic class that either creates the required objects for you or returns an existing object, if you already created it. Inefficient code like this:

    void algorithm(T1 input, T2 output, parameters)
    {
        buffer mybuff(buffer_parameters);     // created on every call
        calculation(input, output, mybuff);
    }   // mybuff goes out of scope and is destroyed

    for (/* many iterations */) algorithm(input, output, parameters);

creates a buffer object in each invocation of algorithm. This is oftentimes unnecessary. The buffer object could be reused in subsequent calls which would result in code such as:

    void algorithm(T1 input, T2 output, parameters, workspace & ws)
    {
        buffer & mybuff = ws.get<buffer>(name, buffer_parameters);
        calculation(input, output, mybuff);
    }   // mybuff is not destroyed; the workspace still owns it

    {
        workspace ws;
        for (/* many iterations */) algorithm(input, output, parameters, ws);
    }   // workspace goes out of scope; all objects are destroyed

The name in the above code acts as an object identifier. If the same object should be used a second time it has to be accessed using the same object identifier.

More Features

Specifying only the name of an object as its identifier might not be enough. The following code could be ambiguous and might result in an error:

    buffer & mybuff = ws.get<buffer>("mybuff", size(1024));
    // later call
    buffer & mybuff = ws.get<buffer>("mybuff", size(2048));

When using mybuff after the later call, one might expect the buffer to have a size of 2048. But the workspace returns the object created earlier, which is smaller. A simple workaround would be to always resize the buffer before it is used.

Alternatively, the workspace can be instructed to treat some of the constructor parameters, not only the name, as part of the object identifier when checking whether the object already exists. Wrapping parameters with the “arg” method marks them to be considered when resolving existing objects.

    buffer & mybuff = ws.get<buffer>("mybuff", workspace::arg(size(1024)));
    // later call
    buffer & mybuff = ws.get<buffer>("mybuff", workspace::arg(size(2048)));

This code will create two different buffer objects.

Implementation

The workspace holds a map of pointers to the objects it manages. The map’s key is an arbitrary name for the resource. In addition to the pointer, a functor that properly destroys the object referenced by the pointer is stored.

A call to get<T> checks whether an object with ‘name’ already exists. If not, an object is created from the parameters, together with a function object to destroy it. In either case, a reference to the object is returned.

When the workspace goes out of scope, its destructor iterates over all objects the workspace holds and destroys them in reverse order of creation.
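The three paragraphs above can be condensed into a short sketch. It is not the code from the workspace repository; it is a hypothetical minimal version written to follow the description, and it leaves out the argument-based lookup. The sketch stores three things:

- a `std::map` from name to a type-erased pointer,
- a `std::function` deleter next to each pointer,
- a `std::vector` with the creation order, so that the destructor can tear objects down in reverse.

Asking for an existing name with a different type is not detected in this sketch. A fuller version might store a `std::type_index` alongside the pointer.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical minimal workspace: owns objects of arbitrary type, keyed by name.
class workspace {
public:
    workspace() = default;
    workspace(const workspace&) = delete;             // owns raw pointers: no copies
    workspace& operator=(const workspace&) = delete;

    // Returns the object called 'name', constructing it from 'args' on first use.
    // Later calls with the same name ignore 'args' and return the existing object.
    template <typename T, typename... Args>
    T& get(const std::string& name, Args&&... args) {
        auto it = objects_.find(name);
        if (it == objects_.end()) {
            T* obj = new T(std::forward<Args>(args)...);
            entry e{obj, [](void* p) { delete static_cast<T*>(p); }};
            it = objects_.emplace(name, std::move(e)).first;
            order_.push_back(name);
        }
        return *static_cast<T*>(it->second.ptr);
    }

    std::size_t size() const { return objects_.size(); }

    // Destroys all managed objects in reverse order of creation.
    ~workspace() {
        for (auto n = order_.rbegin(); n != order_.rend(); ++n) {
            entry& e = objects_.at(*n);
            e.destroy(e.ptr);
        }
    }

private:
    struct entry {
        void* ptr;                            // the managed object
        std::function<void(void*)> destroy;   // remembers the object's real type
    };
    std::map<std::string, entry> objects_;
    std::vector<std::string> order_;          // names in creation order
};
```

Take `ws.get<std::vector<double>>("buf", 1024)` followed by `ws.get<std::vector<double>>("buf", 2048)`. Both calls return the same 1024-element vector, which is exactly the ambiguity described in the previous section.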

I used some of the nifty new C++0x language features (variadic templates, std::function and lambda functions) to implement the workspace class. I thought this was a good idea since the Final Draft International Standard was approved recently. I found these features to be very powerful as well as incredibly useful.

Looking up objects by their arguments (not to be confused with C++’s argument-dependent name lookup) is implemented in a crude way: the arguments are appended to the name string as characters. This should be sufficient for basic use and works well for all built-in types.

Arguments that are supposed to be added to the object identifier are recognized by being wrapped in a special_arg class. Partial template specialization and parameter pack expansion help append the arguments to the object identifier.
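The key-building step can be sketched with a variadic template and two overloads. The name `special_arg` comes from the text, and the text's `workspace::arg` is modelled here as a free function `arg`. Several details are assumptions of this sketch and not necessarily what the repository does:

- the helper names `append_args` and `make_key`,
- the `#` separator,
- the use of `std::ostringstream` to turn values into characters.

Function templates cannot be partially specialized, so this version relies on overload partial ordering instead: the `special_arg<T>` overload is more specialized and wins for wrapped arguments. Any type with an `operator<<` can be wrapped.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Marks a constructor argument as part of the object identifier.
template <typename T>
struct special_arg {
    T value;
};

template <typename T>
special_arg<T> arg(T value) { return special_arg<T>{value}; }

// Base case: no arguments left.
inline void append_args(std::ostringstream&) {}

// Declare both recursive overloads first so each can call the other.
template <typename T, typename... Rest>
void append_args(std::ostringstream& key, const special_arg<T>& a, const Rest&... rest);
template <typename T, typename... Rest>
void append_args(std::ostringstream& key, const T& a, const Rest&... rest);

// Wrapped argument: append its value to the key, then continue.
template <typename T, typename... Rest>
void append_args(std::ostringstream& key, const special_arg<T>& a, const Rest&... rest) {
    key << '#' << a.value;
    append_args(key, rest...);
}

// Plain argument: not part of the identifier, skip it.
template <typename T, typename... Rest>
void append_args(std::ostringstream& key, const T&, const Rest&... rest) {
    append_args(key, rest...);
}

// Builds the lookup key from the name and all wrapped arguments.
template <typename... Args>
std::string make_key(const std::string& name, const Args&... args) {
    std::ostringstream key;
    key << name;
    append_args(key, args...);
    return key.str();
}
```

With this sketch, `make_key("mybuff", arg(1024))` and `make_key("mybuff", arg(2048))` produce different keys. That is why the two `get` calls in the previous section would create two different buffers.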

The current implementation can be found in my workspace git repository.

Discussion

It may very well be that something like this already exists in a more elegant form and that I have simply not been aware of it or failed to recognize it as a solution to this problem. I am aware of pool allocators, for example, but I believe I am proposing a more abstract concept. Buffers that are part of a workspace could still use a pool allocator.

Also, the code in the repository was written rather quickly, and I’m quite sure it has some rough edges where it is not as efficient as it could be (passing by reference comes to mind).

Technology and Art

Oftentimes the merger of technology and art produces wonderful and fascinating things. It is maybe the kind of art I personally enjoy most since I can easily relate to it. Here are some examples I recently discovered:

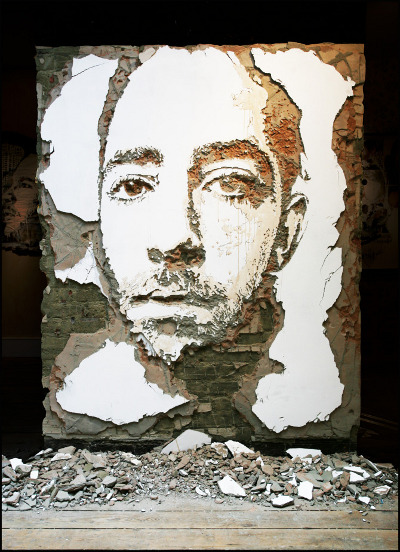

Alexandre Farto is “Vhils”, a Portuguese-born artist who, among other things, carves faces into walls. This alone, while really great, does not really merge technology and art. In this video one can guess how the artist works.

But this video depicts Vhils’ art in an even cooler light: it gives the illusion that the artwork is literally blasted into the wall. Truly amazing.

I discovered this through a “hack a day” article. The article does not say much about how it is done, but there is a lively discussion in its comment section.

People seem to agree that the artist in some cases carves the sculpture into the wall, covers the work and later unmasks it. He might use rubberized explosive: flat sheets of solid but flexible material that can be cut to a specific shape, bent around solid surfaces and glued or taped in place (Source: Wikipedia).

I have to agree with the readers who think it rather unlikely that these are controlled explosions and that the artist creates his work by designing the explosions. The work is nonetheless impressive. You can find more images like this and other works on the artist’s website.

The merger of art and technology is more apparent in the work of generative artists such as Michael Hansmeyer. In the work presented here, the artist designs columns using subdivision processes. He feeds his algorithm parameters that result in a distinct local variance in the patterns that are generated. This results in columns with millions of faces (image on the left).

The artist calls his work Computational Architecture. But generating a column model is only the first step of his process. In a second step, the columns are actually fabricated as a layered model from 1 mm sheets: 2700 sheets for a 2.7 meter high column. Cutting paths are calculated by intersecting the column with a plane, and the sheets are cut using a mill or a laser. The image on the right shows part of a fabricated column. I guess the color is a result of the fabrication process.
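The slicing step can be sketched for a column given as a triangle mesh. This is a generic illustration and not Hansmeyer’s actual software. Each triangle that straddles a cutting plane contributes one line segment, and the segments from all triangles form the outline for that sheet. Chaining the segments into closed loops for the mill or laser is left out. All lengths are in millimetres, so a 2.7 m column with 1 mm sheets gives 2700 cutting planes. The type and function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <vector>

struct Point3 { double x, y, z; };
struct Point2 { double x, y; };
struct Segment { Point2 a, b; };
using Triangle = std::array<Point3, 3>;

// Point (in sheet coordinates) where edge p-q crosses height h.
// Only called when p and q lie on different sides, so q.z != p.z.
Point2 cross_at(const Point3& p, const Point3& q, double h) {
    double t = (h - p.z) / (q.z - p.z);
    return Point2{p.x + t * (q.x - p.x), p.y + t * (q.y - p.y)};
}

// Intersects one triangle with the horizontal plane z = h. Returns true and
// fills 'out' if the plane cuts the triangle. Vertices exactly at height h
// count as "above" (a simplification of this sketch).
bool slice_triangle(const Triangle& tri, double h, Segment& out) {
    std::vector<Point2> hits;
    for (int i = 0; i < 3; ++i) {
        const Point3& p = tri[i];
        const Point3& q = tri[(i + 1) % 3];
        if ((p.z < h) != (q.z < h)) hits.push_back(cross_at(p, q, h));
    }
    if (hits.size() != 2) return false;   // plane misses this triangle
    out = Segment{hits[0], hits[1]};
    return true;
}

// One cutting plane through the middle of each sheet.
std::vector<double> sheet_heights(double column_height, double sheet_thickness) {
    std::vector<double> heights;
    int n = static_cast<int>(column_height / sheet_thickness + 0.5);
    for (int k = 0; k < n; ++k) heights.push_back((k + 0.5) * sheet_thickness);
    return heights;
}

// All cut segments of a mesh at height h (unordered).
std::vector<Segment> slice_mesh(const std::vector<Triangle>& mesh, double h) {
    std::vector<Segment> cut;
    Segment s;
    for (const Triangle& t : mesh)
        if (slice_triangle(t, h, s)) cut.push_back(s);
    return cut;
}
```

For each height from `sheet_heights(2700.0, 1.0)`, `slice_mesh` returns the pieces of one sheet’s cutting path.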

Read more about the artist’s process here including a video of a rendered column. More column images can be found here.

Good? Music?

I added “art” and “music” sections to the blog. I’d like to share two albums I currently enjoy a lot and listen to almost exclusively: “Black Rainbow” by Aucan and “Peanut Butter Blues & Melancholy Jam” by Ghostpoet. Two very different but ingenious albums.

My favorite track from the Aucan album is “Heartless”. It’s really incredible.

From the Ghostpoet album I currently enjoy “Run Run Run” most, but I expect this to change over time. It is an album that grows on you, and you have to listen to it a couple of times to really appreciate it. I don’t want to go into why I like these albums; others are light-years better at music criticism than I am. I just want to say: it’s really good music. You should check it out.

A simple lock-free queue in C++

A while ago I needed a simple lock-free queue in C++ that supports a single producer and a single consumer. I chose a lock-free data structure over a regular std::queue guarded by a mutex and a condition variable to avoid the overhead of exactly those mechanisms. Also, I had never come into contact with the interesting concept of lock-free data types, so I thought this was an excellent opportunity to look into the matter.

Unfortunately (or fortunately) I could not find a production ready lock-free queue in C++ for my platform (Linux). There is the concurrent_queue class available in Visual Studio 2010, there’s the unofficial Boost.Lockfree library (code is here) but those options were not satisfying. I needed something simple that worked right out of the box.

Herb Sutter wrote an excellent article a while back about designing a lock-free queue. It’s straightforward and functional. From my point of view, its only flaw is the use of C++0x atomics, which I wanted to avoid in my project for now. There’s of course the unofficial Boost.Atomic library, but again I wanted something simple.

So I reread Herb’s article, especially the passage that explains the requirements for a “lock-free-safe” variable: atomicity, order and the availability of a compare-and-swap operation. Digging into the Boost.Atomic code, I found that it is rather simple to get atomicity and order when using gcc. I’m using version 4.4.3.

Atomicity can be ensured using the atomic functions of gcc and order can be ensured using a memory barrier trick:

type __sync_lock_test_and_set (type *ptr, type value)

asm volatile("" ::: "memory")

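Despite its name, the first line is an atomic exchange. It writes value into *ptr and returns the previous contents, and GCC documents it as an acquire barrier, not a full barrier. The second line is a compiler barrier. It stops GCC from reordering memory accesses across it, but it emits no CPU fence instruction. On x86 this combination is enough, for two reasons:

- __sync_lock_test_and_set compiles to a locked xchg, which also acts as a hardware fence.
- Ordinary stores are not reordered with other stores.

On weaker architectures such as ARM or POWER, a real fence like __sync_synchronize() would be needed. With this functionality I was all set. Here is the modified code for the method the producer calls: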

    void produce(const T& t)
    {
        last->next = new Node(t);                            // add the new item
        asm volatile("" ::: "memory");                       // compiler barrier
        (void)__sync_lock_test_and_set(&last, last->next);   // publish it
        while (first != divider)                             // trim unused nodes
        {
            Node* tmp = first;
            first = first->next;
            delete tmp;
        }
    }

I replaced the atomic members with regular pointers. I also replaced the assignments that require atomicity and order with calls to __sync_lock_test_and_set, each preceded by the compiler barrier. The result of the call must be cast to void to prevent a “value computed is not used” warning.

    bool consume(T& result)
    {
        if (divider != last)                                 // if queue is nonempty
        {
            result = divider->next->value;                   // copy it back
            asm volatile("" ::: "memory");                   // compiler barrier
            (void)__sync_lock_test_and_set(&divider, divider->next);
            return true;                                     // report success
        }
        return false;                                        // else report empty
    }

The full class can be downloaded here. Please note that the class lacks some important functionality if your use-case is non-trivial (a proper copy-constructor comes to mind).
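The author deliberately avoided C++11 atomics here. For readers who can use them, the same two-pointer design can be written without compiler-specific builtins. The following is a hypothetical, self-contained sketch in the spirit of Herb Sutter’s queue, not the downloadable class:

- Release stores publish a node, and acquire loads observe it.
- Copying is disabled because the queue owns its nodes.
- The dummy node requires T to be default-constructible.

It supports exactly one producer thread and one consumer thread.

```cpp
#include <atomic>
#include <cassert>

template <typename T>
class spsc_queue {
    struct Node {
        T value;
        Node* next;
        explicit Node(const T& v = T()) : value(v), next(nullptr) {}
    };
    Node* first;                 // producer-only: oldest node not yet freed
    std::atomic<Node*> divider;  // written by consumer: last consumed node
    std::atomic<Node*> last;     // written by producer: newest node

public:
    spsc_queue() : first(new Node()), divider(first), last(first) {}
    spsc_queue(const spsc_queue&) = delete;
    spsc_queue& operator=(const spsc_queue&) = delete;

    ~spsc_queue() {
        while (first != nullptr) {
            Node* tmp = first;
            first = first->next;
            delete tmp;
        }
    }

    // Producer thread only.
    void produce(const T& t) {
        Node* tail = last.load(std::memory_order_relaxed);
        tail->next = new Node(t);                            // fill in the node first
        last.store(tail->next, std::memory_order_release);   // then publish it
        Node* d = divider.load(std::memory_order_acquire);
        while (first != d) {                                 // free consumed nodes
            Node* tmp = first;
            first = first->next;
            delete tmp;
        }
    }

    // Consumer thread only. Returns false if the queue is empty.
    bool consume(T& result) {
        Node* d = divider.load(std::memory_order_relaxed);
        if (d != last.load(std::memory_order_acquire)) {
            result = d->next->value;                         // copy the value out first
            divider.store(d->next, std::memory_order_release); // then release the node
            return true;
        }
        return false;
    }
};
```

In this version the release/acquire pairs play the role that the compiler barrier and __sync_lock_test_and_set play in the gcc-specific code above. The ordering guarantee no longer depends on the x86 memory model.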

What it is all about

There is a collection of definitions of lock-free, non-blocking and wait-free algorithms and data structures that can be interesting to read.

The idea of lock-free data structures is that they are written in a way that allows simultaneous access by multiple threads without the use of critical sections. They must be designed so that at any point in time, every thread with access to the data sees a well-defined state of the structure. A modification was either done or not done; there is no in-between, no inconsistency.

This is why all critical modifications to the structure are implemented as atomic operations. It also explains why order is important: before critical stuff can be done, preparations have to be finished and must be seen by all participants.
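The queue in this post gets by without compare-and-swap because each shared pointer has exactly one writer: the producer writes last, and the consumer writes divider. When several threads may modify the same variable, atomic modifications are usually built from a compare-and-swap retry loop. The following hedged illustration uses the same family of GCC builtins as the queue, in this case __sync_val_compare_and_swap. It applies the loop to a hypothetical helper that raises a shared maximum.

```cpp
#include <cassert>

// Atomically raises *target to at least 'value' with a compare-and-swap loop.
// Returns the value *target held just before this call's update (or the
// current value if no update was needed). GCC/Clang builtin.
inline long atomic_update_max(long* target, long value) {
    long seen = *target;   // a stale read is harmless: the CAS below detects it
    while (seen < value) {
        // Writes 'value' only if *target still equals 'seen'; always returns
        // what *target held at the moment of the attempt.
        long prev = __sync_val_compare_and_swap(target, seen, value);
        if (prev == seen) return seen;   // our swap succeeded
        seen = prev;                     // another thread changed it: retry
    }
    return seen;                         // already >= value: nothing to do
}
```

Every modification is either fully applied by one successful compare-and-swap or not applied at all. No thread ever observes a half-updated maximum.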

On Supercomputers

Supercomputers are an integral part of today’s research world, as they are able to solve highly calculation-intensive problems. Supercomputers are used for climate research, molecular modeling and physical simulations. They are important because of their capacity: they provide immense but cost-effective computing power to researchers. Their power can be split across users and tasks, allowing many researchers simultaneous access for their various applications. Supercomputers are also essential because of their capability. Some applications require the maximum computing power to solve a problem; running the same application on a less capable system might not be feasible.

Supercomputers themselves are an interesting research topic and excite scientists of many branches of computer science. I myself have been working in the area of tool development for high performance computing, an area closely related to supercomputers. I therefore follow the current development in this area and even dare to speculate about what is going to happen next.

Recent Development

We have seen how the gaming industry and high performance computing stakeholders started working together to develop the Cell microprocessor architecture, which is used both in game consoles and in supercomputers. Recently, a similar collaboration caused an outcry in the global supercomputing community. China finished its Tianhe-1A system, which achieved 2.507 petaFLOPS on the LINPACK benchmark, making it the fastest computer in the world. It is a heterogeneous system that uses Intel Xeon processors, Nvidia Tesla general purpose GPUs and NUDT FT1000 heterogeneous processors (I could not find any information about the latter).

While these computers are impressive, they are built in what I would call the conventional way. These systems are really at the limit of what is feasible in terms of density, cooling and most importantly energy requirements. Indeed, the biggest challenge for even faster systems is energy.

Upcoming Systems

Right now the Chinese system has the upper hand, but IBM is building a new system in Germany called SuperMUC (German). While using commodity processors, this new system will be cooled with water, a concept I suspect we will see a lot in the future. It is expected to drastically reduce energy consumption.

Another computer that is probably going to dwarf everything we have seen so far is IBM’s Sequoia system. Using water cooling like the German system, it is a Blue Gene/Q design with custom 17-core 64-bit Power Architecture chips. The supercomputer will feature immense density, with 16,384 cores and 16 TB of RAM per rack. As this system aims to reach 20 petaFLOPS, it is a great step toward the exascale computing goal. Personally, I would love to get my hands on a rough schematic of the Blue Gene/Q chip, as it somewhat reminds me of the Cell processor. It also has one core dedicated to running the operating system and a number of other cores for computation. What I would like to know is whether the cores share memory or have dedicated, user-programmable caches connected via a bus.

The role of ARM

I’ve been wondering for a while now if ARM will try to get a foot into the HPC-market. ARM Holdings is a semiconductor (and software) company that licenses its technology as intellectual property (IP), rather than manufacturing its own CPUs. There are a few dozen companies making processors based on ARM’s designs including Intel, Texas Instruments, Freescale and Renesas. In 2007 almost 3 billion chips based on ARM designs were manufactured.

While ARM is considered dominant in the field of mobile phone chips, it has only recently started to break into other markets, especially servers and cloud computing, including high performance computing. Its new Cortex-A15 processor features up to 4 cores, SIMD extensions and a clock frequency of up to 2.5 GHz while being very power-efficient. We will have to see how this plays out, but I can imagine that the Exascale Initiative, for example, is very interested in something like this.

Your own embedded supercomputer (sort of)

A processor similar to the new Cortex-A15 is the OMAP 4 CPU by Texas Instruments. It is a dual-core Cortex-A9 processor with SIMD extensions. While it is targeted at mobile platforms, this CPU is very similar to the upcoming high performance chip, since it supports the same instruction set. There is a cheap, open source development board based on the aforementioned Cortex-A9 called the Pandaboard. It sells for less than $200 and is a complete system that runs Ubuntu. In my opinion, it can be seen as a single node of a high-performance but power-efficient computer cluster.