“Stallman was right” is one of the important messages Eben Moglen conveys in his recent talks about Freedom and the Cloud. He’s a convincing speaker, his arguments are striking and the cause might very well be one of the most important challenges the Internet faces right now.

He speaks about privacy: the freedom to choose who to share what information with. He speaks about freedom of speech: the liberty to speak and communicate freely without censorship. He speaks about how we give up those freedoms, often without noticing or, worse, without caring. By signing up to Facebook. By using Gmail.

It’s insane if you think about it. We communicate with our friends, send them private messages, through a database in Northern California. We store our personal information, including images, in that same database. And while we put more and more information, more and more of our lives, into that database, its owners are busy thinking about ways to make money with our data. By now I’m sure they have come to at least one conclusion: to make money they will share our data with entities we never intended to share it with.

It gets worse, and Eben Moglen elaborates on this very well in his keynote speech at FOSDEM 2011. All our data is routed through and even stored in a company’s database. We can choose to trust that particular company not to do anything bad with our data. But we can never be sure that the company will act in our interest if it is offered incentives (“We won’t shut you down if you comply”) to share certain data with certain government agencies. Since our data is stored in a centralized manner, agencies like the NSA would have a field day.

So indeed, Stallman was right when he called cloud computing a trap. He advises us “to stay local and stick with our own computers”.

Eben Moglen sees the urgency of these problems, so he is taking action: informing the public with persuasive talks and gathering smart, ingenious and influential people to find solutions under the newly founded FreedomBox Foundation. The ingredients of the solution, in accordance with Stallman’s suggestion, have been identified:

- cheap, compact plug computers that can be used as personal servers

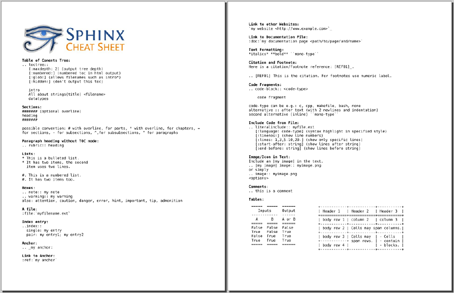

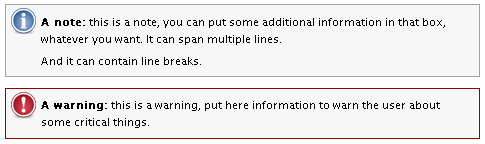

- a free (as in freedom) software stack that is designed to respect and preserve the user’s privacy

- mesh networking technology to keep the network alive if centralized internet connections fail

The idea is simple: replace all cloud services (social network, e-mail, photo storage, etc.) with distributed systems. The FreedomBox project aims to provide everyone with a compact, cheap, low-power plug computer. Such a device will come preloaded with free software that “knows how to securely contact your friends and business associates, is able to store and securely backup your personal data and generally maintaining your presence in the various networks you have come to rely on for communicating with people in society”.

There are a number of other projects out there that are worth mentioning here. A very interesting assessment of the current state of the social web and existing standards is the W3C Social Web Incubator Group report. The Diaspora project develops distributed and secure social networking software and is worth keeping an eye on. The FreedomBox community maintains a comprehensive list of existing projects.

I expect the foundation to develop a software package that provides stable system software and that bundles a number of existing projects, allowing users to move from cloud services to their own server. I will help where I can and so should you!